Recently I had to troubleshoot poor VMware vSphere SAN transport mode performance during backups with Veeam. Before the switch to SAN mode, NBD transport mode had been used, and NBD outperformed SAN mode by a wide margin!

Here are some facts about the environment.

Environment

- The VMFS volumes are hosted on two HPE 3PAR arrays in a synchronous replication configuration (Remote Copy) with Peer Persistence.

- The ESXi hosts run different versions.

- The backup software (here: Veeam Backup & Replication) is installed on a dedicated physical host. This host is connected via 10 GbE LAN and has a single FC uplink to one of the two fabrics.

Backup performance

Previously, this environment used NBD transport mode, because the physical backup host wasn't equipped with an FC HBA. To improve backup performance, an HBA was installed. Running direct SAN backups requires a few configuration steps:

- Add the MPIO feature to the backup server,

- Configure MPIO for 3PAR by running:

  mpclaim -r -i -d "3PARdataVV"

- Configure FC switch zoning,

- Export all LUNs to the backup host,

- Reboot the server.
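The device string passed to mpclaim, "3PARdataVV", is an MPIO device hardware ID. The following minimal Python sketch shows how such an ID is commonly composed, assuming the usual convention that the SCSI vendor ID ("3PARdata") is padded to 8 characters and followed by the product ID ("VV"). The helper names `mpio_hardware_id` and `mpclaim_args` are hypothetical, and the sketch only builds the command line; it does not run mpclaim.

```python
# Sketch only: builds the mpclaim command line for the 3PAR MPIO claim.
# Assumption: the MPIO hardware ID is the SCSI vendor ID padded to
# 8 characters, followed by the product ID (trailing blanks stripped).

VENDOR_ID_LEN = 8  # width of the SCSI INQUIRY vendor identification field


def mpio_hardware_id(vendor: str, product: str) -> str:
    """Return an MPIO hardware ID for a vendor/product pair (hypothetical helper)."""
    if len(vendor) > VENDOR_ID_LEN:
        raise ValueError("SCSI vendor ID is at most 8 characters")
    return vendor.ljust(VENDOR_ID_LEN) + product.rstrip()


def mpclaim_args(hardware_id: str) -> list[str]:
    """Argument list for 'mpclaim -r -i -d <id>' (-r: reboot if required,
    -i: add MPIO support, -d: device hardware ID)."""
    return ["mpclaim", "-r", "-i", "-d", hardware_id]


if __name__ == "__main__":
    hwid = mpio_hardware_id("3PARdata", "VV")
    print(hwid)
    print(mpclaim_args(hwid))
```

Because "3PARdata" is already exactly 8 characters long, no padding is added and the result is the plain string used in the command.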

After that, SAN transport mode performance was miserably poor:

- Observed backup throughput for different VMs ranged from < 1 MB/s (!) to 60 MB/s.

- Backup jobs ran for about 10 minutes before the first byte was transferred.
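To put these numbers in perspective, a rough job duration is the start-up delay plus the VM size divided by the throughput. The small Python sketch below assumes a hypothetical 100 GB VM that is read in full (1 GB = 1024 MB here) and ignores compression, deduplication and changed block tracking; the function name `backup_duration_s` is illustrative.

```python
# Rough backup-duration estimate: start-up delay + data size / throughput.
# Assumptions (hypothetical): full read of the VM, 1 GB = 1024 MB,
# no compression, deduplication or changed block tracking.


def backup_duration_s(size_gb: float, throughput_mb_s: float, startup_s: float = 0.0) -> float:
    """Estimated job duration in seconds."""
    if throughput_mb_s <= 0:
        raise ValueError("throughput must be positive")
    return startup_s + size_gb * 1024 / throughput_mb_s


if __name__ == "__main__":
    vm_size_gb = 100  # hypothetical VM size
    for mb_s in (1, 60):
        # 10 minutes of start-up delay, as observed before the fix
        seconds = backup_duration_s(vm_size_gb, mb_s, startup_s=10 * 60)
        print(f"{mb_s:>3} MB/s: {seconds / 3600:.1f} h")
```

Under these assumptions, such a VM would take more than a day at 1 MB/s and roughly 38 minutes at 60 MB/s.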

Troubleshooting

- No errors on any layer (Windows host, FC switches, 3PAR arrays).

- All SFP metrics on the FC switches were OK.

- The arrays showed no other unexplained performance issues.

- Antivirus software was disabled during testing.

Solution

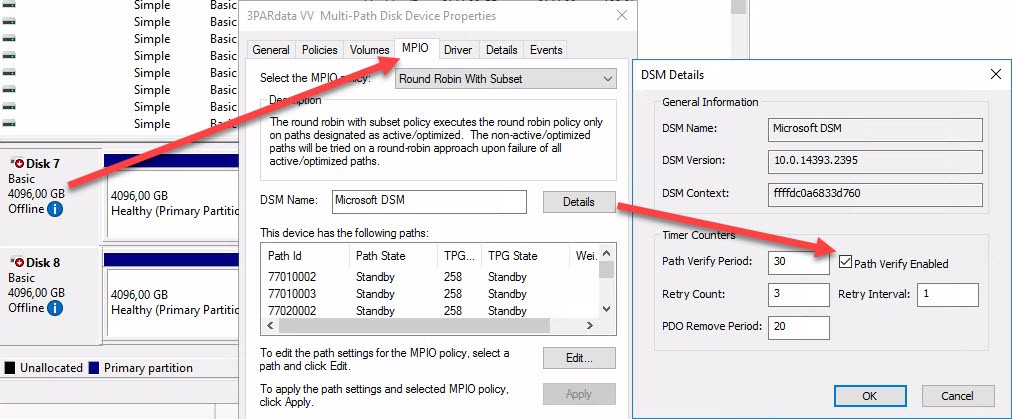

Fortunately, the solution is quite simple: the Microsoft MPIO setting Path Verification has to be enabled. This can be done in two ways:

- Per disk

  - Open Disk Management → open the properties of a disk → select the MPIO tab → press Details → enable Path Verify Enabled → press OK.

  - This has to be done for each 3PAR disk. The advantage is that it can be done online.

- Globally

  - Run the PowerShell command:

    Set-MPIOSetting -NewPathVerificationState Enabled

  - To check the current settings, run:

    Get-MPIOSetting

  - After a reboot, all disks have the feature enabled.
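When several backup hosts need to be checked, the text printed by Get-MPIOSetting can be captured and evaluated by a script. A minimal Python sketch, assuming the default list-style output of `Key : Value` lines; the sample text is illustrative (not captured from the host in this post) and the helper names are hypothetical.

```python
# Sketch: check whether MPIO path verification is enabled by parsing the
# "Key : Value" text printed by the PowerShell cmdlet Get-MPIOSetting.
# The sample below is illustrative, not taken from the environment above.

SAMPLE_OUTPUT = """
PathVerificationState     : Disabled
PathVerificationPeriod    : 30
PDORemovePeriod           : 20
RetryCount                : 3
RetryInterval             : 1
UseCustomPathRecoveryTime : Disabled
CustomPathRecoveryTime    : 40
DiskTimeoutValue          : 60
"""


def parse_mpio_settings(text: str) -> dict[str, str]:
    """Turn 'Key : Value' lines into a dictionary; other lines are ignored."""
    settings = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip():
            settings[key.strip()] = value.strip()
    return settings


def path_verification_enabled(text: str) -> bool:
    """True if PathVerificationState is reported as Enabled."""
    return parse_mpio_settings(text).get("PathVerificationState") == "Enabled"


if __name__ == "__main__":
    if not path_verification_enabled(SAMPLE_OUTPUT):
        print("Path verification is disabled: run "
              "Set-MPIOSetting -NewPathVerificationState Enabled and reboot")
```

Parsing the text rather than the PowerShell object keeps the check independent of how the output was collected, at the cost of depending on the default formatting.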

Notes

- This issue is not specific to Veeam Backup & Replication. Any backup solution that uses SAN mode can suffer from poor performance here.

- With Path Verification enabled, we increased backup throughput for a VM located on SSDs from about 60 MB/s to 600 MB/s.

- The HPE 3PAR Windows Server 2016/2012/2008 Implementation Guide states that path verification has to be enabled in a Peer Persistence environment. Until now, I had not needed this on Windows backup hosts, since in that role Windows only reads from 3PAR volumes. It is definitely necessary, however, when Windows writes to 3PAR Peer Persistence LUNs.

- In my opinion, this could happen with Primera arrays too.

- To create the right 3PAR/Primera Peer Persistence claim rule for ESXi hosts, see here.