A new feature in vSphere 7 is the ability to configure a dedicated VMkernel port for backups in NBD (Network Block Device) mode, also known as Network mode. This makes it possible to isolate backup traffic from other traffic types. Before this release, there was no direct way to select a VMkernel port for backup. In this post I show how to isolate NBD backup traffic in vSphere.

Configuration

It is quite simple to configure backup traffic isolation. vSphere 7 introduces a new VMkernel service tag for backup: vSphere Backup NFC. NFC stands for Network File Copy. When this service is enabled on a VMkernel port, vSphere returns the IP address of that port when the backup software asks for the ESXi host's address.

So all you need to do is add a new VMkernel port, enable the vSphere Backup NFC service on it, and set its IP address and VLAN ID. The host does not need to be rebooted. From then on, NBD backup traffic goes through this port.
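
For those who prefer the command line, here is a minimal sketch that only assembles the esxcli commands for these steps; it does not run anything on a host. It assumes the port group already exists with the right VLAN ID. The port group name `Backup` and the netmask are placeholders I made up for illustration, and the option names should be checked against the esxcli reference for your ESXi build.

```python
import shlex


def backup_vmk_commands(vmk, portgroup, ip, netmask):
    """Return esxcli command lines for a VMkernel port tagged for backup.

    Assumption: the port group already exists and carries the VLAN ID.
    """
    steps = [
        # 1. Create the VMkernel interface on the existing port group
        ["esxcli", "network", "ip", "interface", "add",
         f"--interface-name={vmk}", f"--portgroup-name={portgroup}"],
        # 2. Assign a static IPv4 address
        ["esxcli", "network", "ip", "interface", "ipv4", "set",
         f"--interface-name={vmk}", f"--ipv4={ip}",
         f"--netmask={netmask}", "--type=static"],
        # 3. Enable the backup service by adding the vSphereBackupNFC tag
        ["esxcli", "network", "ip", "interface", "tag", "add",
         f"--interface-name={vmk}", "--tagname=vSphereBackupNFC"],
    ]
    return [shlex.join(step) for step in steps]


if __name__ == "__main__":
    # "Backup" and the /24 netmask are hypothetical values
    for line in backup_vmk_commands("vmk2", "Backup", "10.10.250.1", "255.255.255.0"):
        print(line)
```

Printing the commands instead of executing them makes it easy to review them before pasting them into an SSH session on the host.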

What does it look like

There are a few options for verification. You can check the log files or monitor ESXi network throughput. On my demo ESXi host, I created a VMkernel port vmk2 with the backup tag. The IP address of this port is 10.10.250.1.

Log file

Your backup software probably creates logs for each backup process. In the case of VBR (Veeam Backup & Replication), several log files are created. To find out which IP address is used for backup traffic, search the log files named Agent.job_name.Source.VMDK_name.log in the directory C:\ProgramData\Veeam\Backup\job_name on the VBR server.

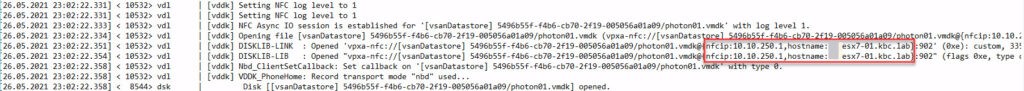
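
If you do not want to open every file by hand, the search can be scripted. The following is a rough sketch, not a Veeam tool: it simply counts every IPv4 literal in the matching log files, because I am not assuming anything about the exact wording of the log lines. The file encoding (UTF-8 with undecodable bytes ignored) is also an assumption, and other dotted numbers such as version strings will show up in the result as well.

```python
import re
from collections import Counter
from pathlib import Path

# Matches anything that looks like an IPv4 address (no range validation)
IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def ips_in_agent_logs(job_dir):
    """Count IPv4 literals in Agent.*.Source.*.log files inside job_dir."""
    counts = Counter()
    for log in Path(job_dir).glob("Agent.*.Source.*.log"):
        # Encoding is an assumption; undecodable bytes are skipped
        text = log.read_text(encoding="utf-8", errors="ignore")
        counts.update(IPV4.findall(text))
    return counts
```

Calling `ips_in_agent_logs(r"C:\ProgramData\Veeam\Backup\job_name")` with your own job directory and looking up `"10.10.250.1"` in the result tells you whether the tagged port's address appears in the logs at all.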

In the appropriate log file, you can see the IP address that vCenter returns for the backup connection. Here is a screenshot with the backup tag enabled.

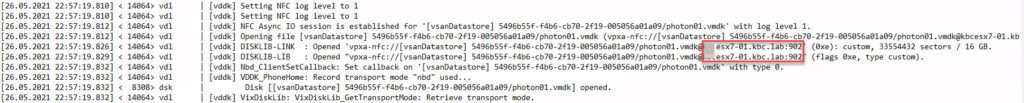

Compared to no tagged network port:

Monitor ESXi network

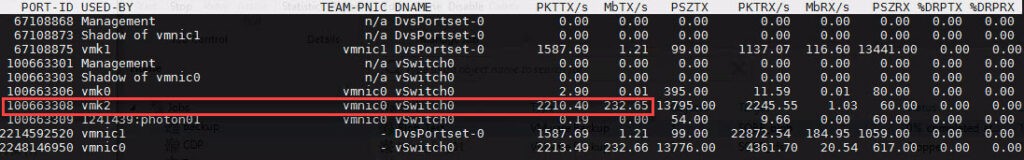

For real-time monitoring I prefer esxtop in the ESXi console. In this screenshot you can see backup traffic on vmk2:

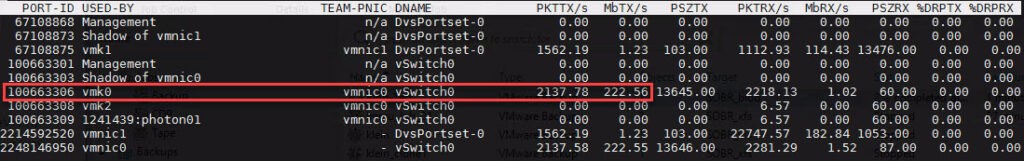

Compared to a host without a port tagged for backup:

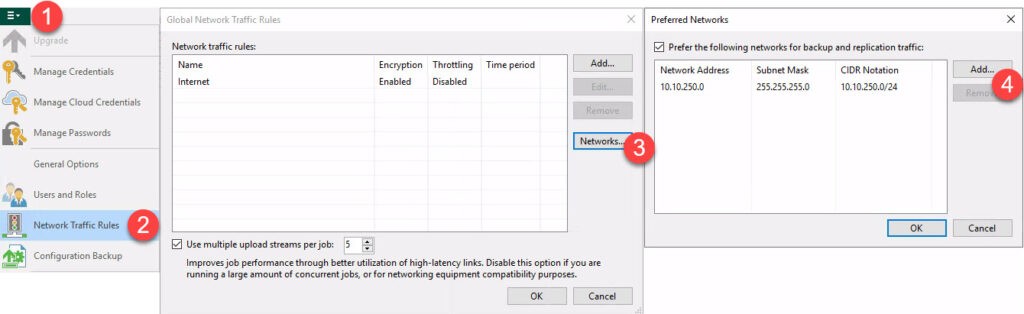

Veeam Preferred Networks

The question may arise whether it is possible to select the ESXi VMkernel port natively in VBR. To find out, I tried to use Preferred Networks to define my network of choice.

Answer: this does not work. Backup traffic is still routed through the default management port of the host.

What about the other way round: can Preferred Networks prevent the selection made by tagging a VMkernel port? The answer here is also no. Even if the management network is configured as preferred, the tagged port is used.

For more information, such as command-line options, visit

https://vnote42.net/2021/05/31/how-to-isolate-nbd-backup-traffic-in-vsphere/

[update; 20.07.2021]

It has turned out that there are issues when isolated backup traffic is routed. In this situation, ESXi does not work as expected. I was able to reproduce this in my lab; the behavior was just strange!

According to VMware Support, this will be reviewed internally. So for now: do not use this feature if you have to route the isolated backup traffic.
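
To check quickly whether a setup is affected, you can test whether the backup proxy sits in the same subnet as the tagged VMkernel port. This is a small sketch under my own assumptions: "routed" is taken to mean "the proxy's IP is outside the VMkernel port's subnet", and the /24 prefix and the proxy addresses in the usage example are hypothetical.

```python
import ipaddress


def backup_traffic_is_routed(vmk_cidr, proxy_ip):
    """Return True if proxy_ip is outside the VMkernel port's subnet.

    vmk_cidr is the port's address with prefix length, e.g. "10.10.250.1/24".
    """
    vmk_net = ipaddress.ip_interface(vmk_cidr).network
    return ipaddress.ip_address(proxy_ip) not in vmk_net
```

With the demo port from above, `backup_traffic_is_routed("10.10.250.1/24", "10.10.250.50")` returns `False` (same subnet), while a proxy at `10.10.10.50` returns `True` and would fall under the warning.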